Healthcare leaders increasingly view artificial intelligence (AI) as a key lever for solving systemic problems, from clinician burnout and staffing shortages to administrative inefficiencies and population health. But the financial promise of AI can’t be separated from its risk profile. As adoption accelerates, many organizations are sleepwalking into a costly trap: deploying AI without the oversight needed to protect operations, reputation, or revenue. These gaps aren’t just compliance issues; they are business vulnerabilities that can directly undermine return on investment, escalate liability, and erode long-term value.

The consequences of inaction are already surfacing, with some health systems facing regulatory scrutiny and operational disruptions tied to poorly governed tools. This was evident in a recent case where a large provider came under investigation after using an AI system that allegedly overrode clinical judgment, highlighting the real-world risks of insufficient oversight. As a result, forward-looking organizations are realizing that responsible AI governance is not a tech issue; it is a leadership imperative.

Why AI Governance Must Come First

The healthcare sector is energized by AI’s potential, but practical readiness to implement these technologies remains limited. According to Drummond’s Securing AI Risk: Creating Adoption-Ready Health IT Solutions report:

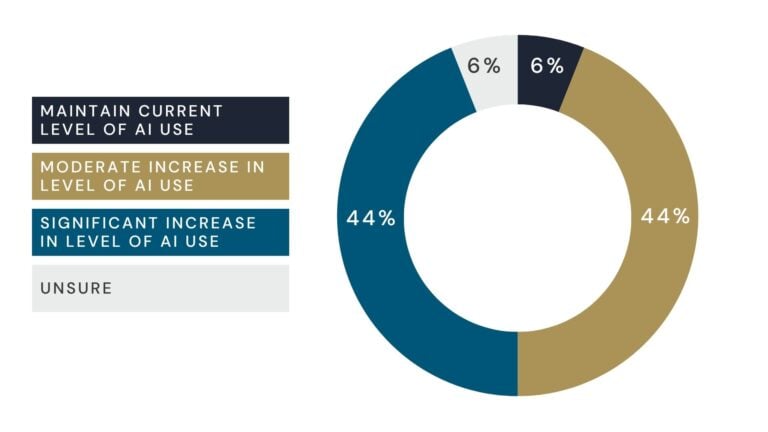

88% of healthcare providers and health IT vendors expect their AI use to grow within two years.

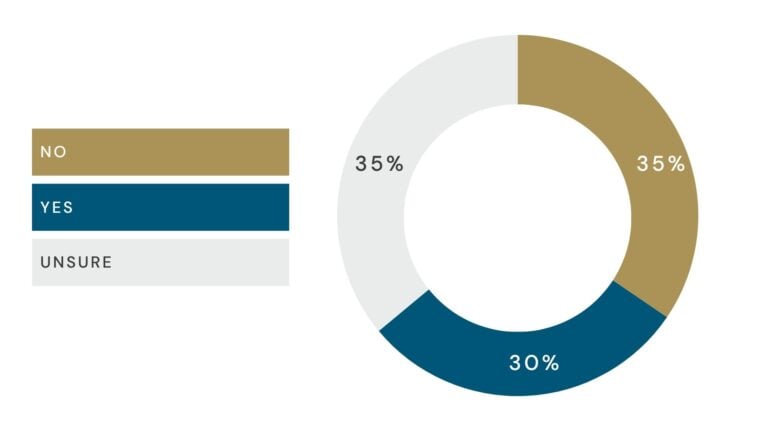

However, 70% of participants reported that they either did not have a formal AI risk governance program or were unsure whether they had one.


This disconnect between ambition and preparedness creates a precarious environment. Many pilots stall due to unresolved concerns about data readiness, security, and compliance. And the tools that do move forward without proper vetting can generate hallucinated results, disrupt clinical workflows, or violate HIPAA regulations. Unsanctioned use of generative AI in patient documentation, for example, has already raised concerns around data exposure and regulatory risk.

Unmet governance requirements are not only a regulatory issue but a strategic one. Unchecked enthusiasm without supporting infrastructure exposes healthcare organizations to cascading business risks, from patient harm to liability and loss of trust. Bridging this gap requires healthcare leaders to move beyond experimentation and embed governance, accountability, and staff enablement into their AI strategy from the start.

With this clear gap between enthusiasm and readiness, the question now becomes: Who is responsible for managing these risks and closing this divide?

Responsibility Must Be Shared

Responsibility for AI oversight cannot rest solely on developers. While they are instrumental in designing systems with safety, security, and compliance in mind, they are only one piece of a much larger puzzle. The increasingly widespread integration of AI across the healthcare ecosystem means that every stakeholder (providers, payers, and technology vendors) must take active responsibility for governance.

Why? Because AI in healthcare rarely operates in a silo. An unchecked tool used by a provider can compromise patient privacy, influence payer decisions, or impact a broader health network through cascading data errors. Likewise, an inaccurate algorithm embedded in a payer’s system can affect reimbursement decisions that alter clinical workflows or even patient access to care. The ripple effects are systemic, not isolated. Negligence in one corner of the healthcare sector can quickly undermine the entire fabric of trust and operational continuity.

This interconnectedness makes shared responsibility not just preferable, but essential. However, shared responsibility should not be mistaken for a lack of delineated responsibility. While everyone must play a role in governance, what they are responsible for will vary based on their position in the healthcare ecosystem, whether developer, provider, or payer. Clearly defining these roles is just as important as acknowledging shared accountability. Without this clarity, organizations risk falling into what experts call “responsibility diffusion”, a scenario where everyone assumes someone else is in charge. In complex environments like healthcare, this ambiguity can lead to oversight failures, delayed mitigation, and systemic vulnerabilities.

This means healthcare providers must establish rigorous internal governance structures, even when tools are vendor-built. It means payers must vet AI tools for fairness, transparency, and compliance before using them in coverage decisions. Additionally, developers must commit to transparency, auditability, and collaboration with their clients, not just as a compliance gesture but as a strategic advantage.

Ultimately, the organizations that treat AI risk management as everyone’s job, not someone else’s problem, will be best positioned to use these technologies effectively and sustainably. It is not enough to “hope” that a vendor has built in safeguards. Accountability must be intentional, distributed, and embedded into every stage of AI use, from procurement and onboarding to performance monitoring and long-term evaluation.
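One way to guard against responsibility diffusion is to write down who owns each stage and check for gaps. The following is a minimal sketch in Python, not a prescribed method: the stage names come from the paragraph above, while the department assignments and the `unowned_stages` helper are hypothetical and shown only for illustration.

```python
# Lifecycle stages named in the text above.
STAGES = [
    "procurement",
    "onboarding",
    "performance monitoring",
    "long-term evaluation",
]

# Hypothetical assignments for illustration only;
# each organization would define its own owners.
owners = {
    "procurement": ["compliance", "legal"],
    "onboarding": ["IT", "clinical"],
    "performance monitoring": [],  # listed, but nobody assigned
}


def unowned_stages(stages, owners):
    """Return the stages that have no named accountable party."""
    return [stage for stage in stages if not owners.get(stage)]


print(unowned_stages(STAGES, owners))
# → ['performance monitoring', 'long-term evaluation']
```

Any stage that appears in the output is one where everyone may be assuming someone else is in charge.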

Strengthening Capacity Through Strategic Support

For many healthcare leaders, building robust AI governance can feel daunting. Budget constraints, competing priorities, and limited in-house expertise make it difficult to implement oversight programs at scale. However, developing internal capacity does not mean adding dozens of new roles. It means establishing clear responsibilities across departments (compliance, clinical, legal, and IT), and creating shared accountability within one’s organization.

This is where strategic external support can play a vital role. Third-party services specializing in healthcare AI oversight can help bridge the internal capacity gap. From vetting vendors to auditing tools and stress-testing high-risk scenarios, these partners bring technical depth and regulatory expertise that supports sustainable scaling.

These services, when aligned with frameworks like the NIST AI Risk Management Framework, include comprehensive AI risk assessments, fairness audits, and independent validation of vendor tools. Together they give developers, payers, and providers the assurance needed to meet critical standards for safety, transparency, and compliance.

Furthermore, by working with outside experts, healthcare organizations can adopt a proactive governance model that strengthens internal processes while minimizing the operational burden on staff. This balanced approach can reduce the likelihood of missteps that could derail return on investment.

Key Takeaway: Long-Term Success Depends on Sustainable AI

ROI in healthcare AI is often judged by immediate gains like speed or cost savings. But real value comes from solutions built for reliability and risk management. A tool that saves time but increases the chance of data breaches or compliance issues ultimately undermines its benefit.

The organizations that succeed with AI prioritize durability, choosing tools with audit logs, anonymization, explainability dashboards, and clear consent management. These features protect long-term performance and trust, going beyond mere regulatory compliance.
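As a rough illustration, the durability features listed above could be turned into a simple procurement checklist. This is a sketch under stated assumptions: the feature labels mirror the paragraph above, but the `missing_features` helper and the sample tool record are hypothetical, and a real evaluation would need evidence for each feature rather than a vendor-supplied list.

```python
# Durability features named in the text above.
REQUIRED_FEATURES = {
    "audit logs",
    "anonymization",
    "explainability dashboard",
    "consent management",
}


def missing_features(tool_features):
    """Return the required features a tool does not claim, in alphabetical order."""
    return sorted(REQUIRED_FEATURES - set(tool_features))


# Hypothetical tool record for illustration only.
candidate = {
    "name": "example-documentation-tool",
    "features": ["audit logs", "anonymization"],
}

print(missing_features(candidate["features"]))
# → ['consent management', 'explainability dashboard']
```

An empty result would mean the tool claims every listed feature; it would not by itself confirm that those features work as intended.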